European Commission presents Roadmap for effective and lawful access to data for law enforcement - 24 June 2025

https://home-affairs.ec.europa.eu/news/commission-presents-roadmap-effective-and-lawful-access-data-law-enforcement-2025-06-24_en

#dataretention #lawfulinterception #digitalforensics #encryption #ai

EPFL and CHUV are part of an Africa–European Union partnership that aims to deploy an AI-based application to make tuberculosis diagnosis more accessible and more affordable.

The Netherlands wants to build an AI factory in Groningen, with SURF as a partner. It is meant to give researchers, governments, companies and start-ups access to powerful computing capacity, expertise and reliable data for developing AI applications.

The factory will have a supercomputer, work with secure European data sources and bring experts together.

We are proud of the proposal and look forward to EuroHPC's decision in September.

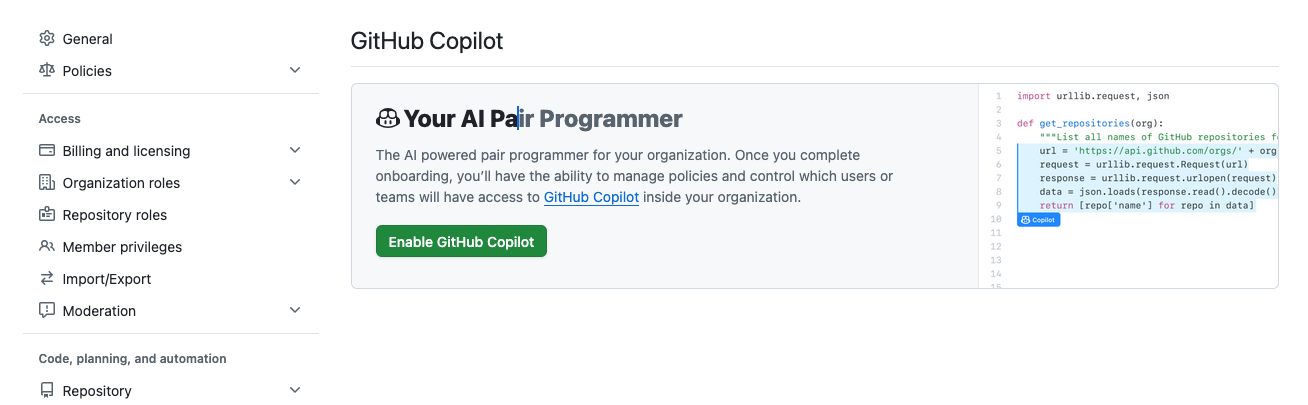

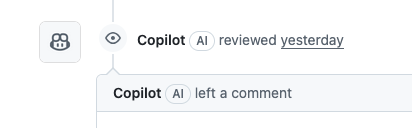

In a private #Github organization, in a private repo filled with NDA code, GitHub decided to automatically start reviewing that code using Copilot.

Mind you, Copilot is disabled for this organization.

Could we please just fucking not?! Never mind the fact that the GitHub organization didn't enable this; there is no data policy in sight. I have no clue what Copilot does with the data after it has "reviewed" the code, and I could potentially be breaking the signed NDA.

#AI #Github #Copilot

Every time you use a server-based LLM, you hasten the fall of civilization due to climate change.

I wish I were being hyperbolic.

https://www.frontiersin.org/news/2025/06/19/ai-prompts-50-times-more-co2-emissions

"CC signals are a proposed framework to help content stewards express how they want their works used in AI training—emphasizing reciprocity, recognition, and sustainability in machine reuse. They aim to preserve open knowledge by encouraging responsible AI behavior without limiting innovation."

Note that the proposed "social contract" does not include a signal for content providers to say they don't want their work used in AI training. What's with that?

(I was going to file an issue on it, but as usual @mcc is ahead of me. Here's the link again ... if you also think this is a problem, it might be useful to upvote it and perhaps add a comment.)

Hilariously, their list of concepts that the project "draws inspiration" from starts with "consent". Indeed, the full report (PDF) says that "We believe creator consent is a core value of and a key component to a new social contract." I believe that too; I just don't see how the actual proposal aligns with that.

UPDATE: discussion on Github suggests that this proposal builds on an IETF proposal, which does have a way to specify this -- but takes no position on the default, if it's not specified. So that's somewhat better, but it's still opt-out -- and opt-out isn't real consent. More details in the reply at https://infosec.exchange/@thenexusofprivacy/114748419834505739

I learned something today: Google's Gemini "AI" on phones accesses your data from "Phones, Messages, WhatsApp" and other stuff whether you have Gemini turned on or not. It just keeps the data longer if you turn it on. Oh, and lets it be reviewed by humans (!) for Google's advantage in training "AI" etc.

But this only came to my attention because of an upcoming change: it's going to start keeping your data long-term even if you turn it "off": "#Gemini will soon be able to help you use Phone, #Messages, #WhatsApp, and Utilities on your phone, whether your Gemini Apps Activity is on or off."

This is, of course, a #privacy and #security #nightmare.

If this is baked into Android, and therefore not removable, I'd have to recommend against using Android at all starting July 7th.

#spyware #AI #LLM #Google #spying #phone #Android #private #data

Holy #surveillance hell, Batman.

Let me get this straight:

First, they feed your video, which is already stored in their cloud, into an #AI transformer to write descriptions.

Then they feed your descriptions into a pattern learning system (ML, maybe?) to figure out your patterns and habits.

All of this is stored in the cloud. So they not only have your video, but a narrative about your habits, ready to be exfiltrated, monetized, and shared with law enforcement.

#ai #enshittification #RingCamera

https://www.theregister.com/2025/06/25/amazons_ring_ai_video_description/

Today sounds like an excellent time to bring up Nightshade.

🐝 Dating platform #Bumble sends user data to #OpenAI without their consent. We have therefore filed a GDPR complaint against the company.

📰 Read more on our website: https://noyb.eu/en/bumbles-ai-icebreakers-are-mainly-breaking-eu-law

At any rate, here are the signals in the @creativecommons CC Signals proposal. My initial reaction: these seem reasonable enough as far as they go ... it's just that they don't include the ability to say "no".

Credit: You must give appropriate credit based on the method, means, and context of your use.

Direct Contribution: You must provide monetary or in-kind support to the Declaring Party for their development and maintenance of the assets, based on a good faith valuation taking into account your use of the assets and your financial means.

Ecosystem Contribution: You must provide monetary or in-kind support back to the ecosystem from which you are benefiting, based on a good faith valuation taking into account your use of the assets and your financial means.

Open: The AI system used must be open. For example, AI systems must satisfy the Model Openness Framework (MOF) Class II, MOF Class I, or the Open Source AI Definition (OSAID).

And btw here's @creativecommons' supporters page, which thanks (among others) Microsoft, Google, the Chan Zuckerberg Foundation, Amazon Web Services, and Mozilla (which has pivoted to become an AI company these days).

Huh.

Not to be cynical or anything, but I wonder if that has anything to do with the reason they don't think content creators should be able to say "no" to #AI?
